The Covid-19 pandemic has catapulted one mysterious data website to prominence, sowing confusion in international rankings

MADRID — On April 28, Spanish Prime Minister Pedro Sánchez stood alone on the stage of a bright but empty briefing room. As a CNN reporter asked a question via video link, the prime minister looked deep in concentration, scribbling notes and pausing to look at the monitor only once. As he launched into his answer, he looked directly into the camera to boast about Spain’s Covid-19 testing volume.

“We are one of the countries with the highest number of tests carried out,” Sánchez said.

Initially, the prime minister cited data from a recent Organization for Economic Cooperation and Development (OECD) ranking that had placed Spain eighth in Covid-19 testing among its members.

“Today,” he added, “we have found out about another study, from the Johns Hopkins University, that […] ranks us fifth in the world in total tests carried out.”

There were just two problems: The OECD data had been wrong. And while some sources had ranked Spain fifth in total testing volume, Johns Hopkins was not one of them; the study Sánchez cited does not exist.

Yet two weeks later, the Spanish government is standing by the substance of its prime minister’s claim. Instead of citing Johns Hopkins, Spanish officials are now pointing to testing rankings from a data aggregation website called Worldometer — one of the sources behind the university’s widely cited coronavirus dashboard — and prompting questions about why some governments and respected institutions have chosen to trust a source about which little is known.

What is Worldometer?

Before the pandemic, Worldometer was best known for its “counters,” which provided live estimates of numbers like the world’s population or the number of cars produced this year. Its website indicates that revenue comes from advertising and licensing its counters. The Covid-19 crisis has undoubtedly boosted the website’s popularity. It’s one of the top-ranking Google search results for coronavirus stats. In the past six months, Worldometer’s pages have been shared about 2.5 million times — up from just 65 shares in the first six months of 2019, according to statistics provided by BuzzSumo, a company that tracks social media engagement and provides insights into content.

The website claims to be “run by an international team of developers, researchers, and volunteers” and “published by a small and independent digital media company based in the United States.”

But public records show little evidence of a company that employs a multilingual team of analysts and researchers. It’s not clear whether the company has paid staff vetting its data for accuracy or whether it relies solely on automation and crowdsourcing. The site does have at least one job posting, from October, seeking a volunteer web developer.

Once known as Worldometers, the website was originally created in 2004 by Andrey Alimetov, then a 20-year-old recent immigrant from Russia who had just gotten his first IT job in New York.

“It’s a super simple website, there is nothing crazy about it,” he recently told CNN.

Within about a year, Alimetov said, the site was getting 20,000 or 30,000 visits every day but costing him too much money in web-hosting fees.

“There was no immediate fast way to cash out,” he said, so he listed the site on eBay and sold it for $2,000 sometime in 2005 or 2006.

Changing hands

When Reddit’s homepage featured his old site in 2013, Alimetov emailed the buyer, a man named Dario, to congratulate him.

In his reply, Dario said he bought the site to drive traffic to his other websites.

As those businesses “started to decline, I decided to invest on Worldometers, bringing in resources and people until eventually it took a course of its own,” Dario wrote.

Worldometer no longer bears its trailing “s” except in its URL. Beyond that, not much has changed.

Today, the Worldometer website is owned by a company called Dadax LLC.

Representatives for Worldometer and Dadax did not respond to CNN’s requests for interviews, but state business filings show Dadax was first formed in Delaware, in 2002. The filing lists a PO box as the company’s address. From 2003 to 2015, business filings in Connecticut and New Jersey listed Dadax’s president as Dario Pasqualino. Addresses on the filings tied the company and Pasqualino to homes in Princeton, New Jersey, and Greenwich, Connecticut. The company is still actively registered in Delaware and has been in good standing since 2010.

The company shares the Dadax name with a Shanghai-based software firm. In March, both companies issued statements denying a connection. The Chinese Dadax said it issued its statement after receiving “many calls and emails” about the stats site. Worldometer, in a tweet, said it’s never had “any type of affiliation with any entity based in China.”

IDs in the source code for Worldometer and the US Dadax’s websites link them to at least two dozen other websites that appear to share ownership. Some appear to be defunct. Others, such as usalivestats.com, italiaora.org and stopthehunger.com, share the same premise: live stats counters. Most of the sites have a rudimentary aesthetic, reminiscent of a 1990s or early 2000s internet. Some seem quite random. One Italian site displays Christmas poems and gift suggestions, like a bonsai plant (for her), or a plot of land on the moon (for him). Another site is dedicated to Sicilian puppet shows.

A person with Pasqualino’s name and birthday is also registered as a sole proprietor in Italy. That business manages and sells “advertising space,” according to an Italian registration document filed last year. Its address leads to a tidy, three-story apartment building on a leafy street in an upscale neighborhood in Bologna.

CNN was unable to reach Pasqualino through contact information listed on Worldometer and in public records.

According to Worldometer’s website, its Covid-19 data comes from a multilingual team that “monitors press briefings’ live streams throughout the day” and through crowdsourcing.

Visitors can report new Covid-19 numbers and data sources to the website – no name or email address required. A “team of analysts and researchers” validates the data, the website says. It may, at first, sound like the Wikipedia of the data world, but some Wikipedia editors have decided to avoid Worldometer as a source for Covid-19 data.

“Several updates lack a source, do not match their cited source or contain errors,” one editor, posting under the username MarioGom, wrote on a discussion page for Wikipedia editors working on Covid-19-related content last month. “Some errors are small and temporary, but some are relatively big and never corrected.”

The editor, whose real name is Mario Gómez, told CNN in an email, “Instead of trying to use a consistent criteria, [Worldometer] seems to be going for the highest figure. They have a system for users to report higher figures, but so far I failed to use it to report that some figure is erroneous and should be lower.”

Edouard Mathieu, the data manager for Our World in Data (OWID), an independent statistics website headquartered at Oxford University, has seen a similar trend.

“Their main focus seems to be having the latest number wherever it comes from, whether it’s reliable or not, whether it’s well-sourced or not,” he said. “We think people should be wary, especially media, policy-makers and decision-makers. This data is not as accurate as they think it is.”

Virginia Pitzer, a Yale University epidemiologist focused on modeling Covid-19’s spread in the United States, said she’d never heard of Worldometer. CNN asked her to assess the website’s reliability.

“I think the Worldometer site is legitimate,” she wrote via email, explaining that many of its sources appear to be credible government websites. But she also found flaws, inconsistencies and an apparent lack of expert curation. “The interpretation of the data is lacking,” she wrote, explaining that she found the data on active cases “particularly problematic” because data on recoveries is not consistently reported.

Pitzer also found few detailed explanations of data reporting issues or discrepancies. For Spain, it’s a single sentence. For many other countries, there are no explanations at all.

She also found errors. In the Spanish data, for instance, Worldometer reports more than 18,000 recoveries on April 24. The Spanish government reported 3,105 recoveries that day.

Accuracy, fairness and apples-to-apples comparisons

When Spanish Prime Minister Pedro Sánchez boasted of Spain’s high rankings, he didn’t pull his numbers out of thin air. On April 27, the OECD wrongly ranked Spain eighth in testing per capita. Initially, the OECD had used data from OWID to compile its statistics. But it sourced the Spanish numbers independently because OWID’s data was incomplete. The mixed sourcing skewed Spain’s position in the ranking because the Spanish figure counted a broader category of tests than the other countries’ figures did. The organization corrected itself the next day, two hours before Sánchez’s press conference, bumping Spain to 17th place.

In its statement, the OECD said “we regret the confusion created on a sensitive issue by any debate on methodological issues” and stressed that increasing the availability of testing in general is more important than knowing where any particular country ranks.

Sánchez’s later reference to a Johns Hopkins study, in which he said Spain ranked fifth for testing worldwide, appears to have been a case of mixed-up attribution by the prime minister. JHU has not published international testing figures. Jill Rosen, a spokeswoman for the school, told CNN the university couldn’t identify a report that matched Sánchez’s description.

At a press conference on May 9, Sánchez evaded a CNN question pressing him on the JHU study’s existence and listed the government’s numbers on testing totals instead. In comments made to a Spanish reporter the next day, health minister Salvador Illa continued to insist the testing data had been released by JHU, though he pointed to Worldometer as the underlying source. Since Johns Hopkins gets its data from Worldometer, he argued, it’s just as good.

“It is data given by the John Hopkins University […] taken from as a fundamental source of information, the website Worldometer,” Illa said. “You can check it.”

It is true that on April 28, Worldometer’s data had ranked Spain fifth when it came to total testing volume. At the time, OWID data also ranked Spain fifth, but as more countries began reporting larger testing volumes, it became clear how Worldometer’s data is flawed. Its Spain figure includes both polymerase chain reaction (PCR) tests, which show if patients are currently infected, and antibody tests, which indicate if patients were ever infected. For most countries besides Spain, Worldometer's data appears to only count PCR tests.

Because so few countries report antibody testing data and to ensure an apples-to-apples comparison, OWID says it only tracks PCR tests. By that measure, as of May 17, Spain ranks sixth, behind the US, Russia, Germany, Italy and India. Worldometer ranked Spain fourth.

But relying on the ranking by the raw number of tests performed is still misleading because it doesn’t account for population differences between countries.

OWID’s data manager, Edouard Mathieu, says a much fairer way to compare testing data is to account for population size. As of May 10, OWID placed Spain 19th in testing per 1,000 people. Worldometer placed Spain 15th by a similar measure.

Tale of two rankings

Worldometer’s data does rank Spain fifth in terms of total testing volume. But relying on raw numbers is misleading because it doesn’t account for differences between countries. When adjusted for population, Spain’s ranking falls to 16th. Experts say this data, from Worldometer, is further flawed because its Spain figure counts a broader category of tests than most other countries’.

[Chart: Top 20 countries by total tests performed and by tests performed per 1 million residents. Source: Worldometer]

Roberto Rodríguez Aramayo, a research professor at the Spanish National Research Council (CSIC)’s Institute of Philosophy and a former president of a Spanish ethics association, said Spain is reporting data from both the most and least reliable types of tests.

“Unfortunately, there seems to be certain [political] interests in the readings that are given of these data, when they are shown,” he said.

What does Worldometer have to do with Johns Hopkins University?

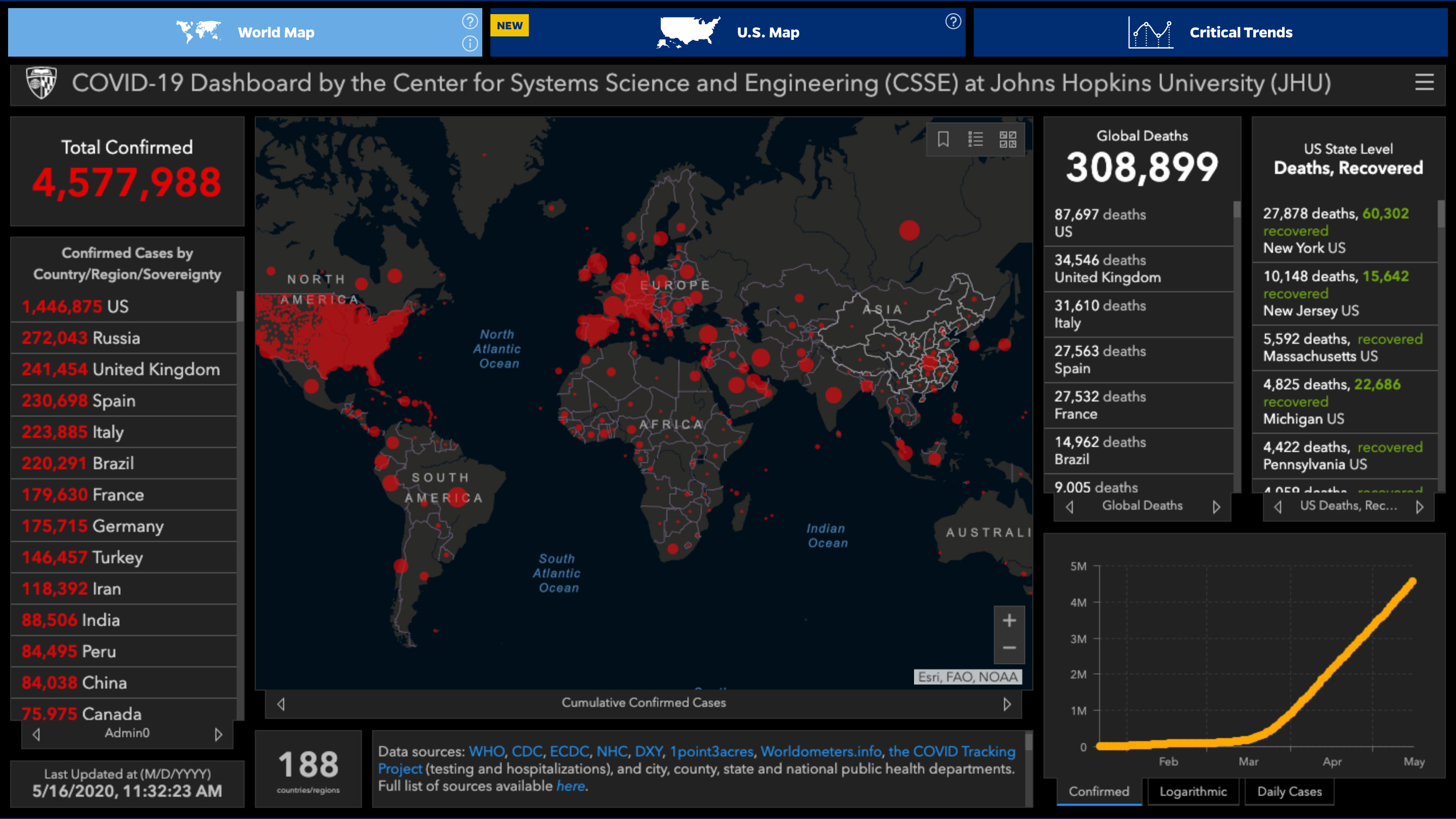

Johns Hopkins has not published international data on Covid-19 testing, but it does list Worldometer as one of several sources for its widely-cited coronavirus dashboard.

The university has declined to say what specific data points it relies on Worldometer for, but issues with the counter site’s data have caused at least one notable error.

On April 8, JHU’s global tally of confirmed Covid-19 cases briefly crossed 1.5 million before dropping by more than 30,000. Johns Hopkins later posted an explanation for the incident on its GitHub page. At the time, JHU told CNN the error appeared to come from a double counting of French nursing home cases. But French officials told CNN there had been no revision, not even to nursing home data. Johns Hopkins’ data appeared to come directly from Worldometer. The website listed no source for its figure.

One Wikipedia editor, James Heilman, a clinical assistant professor of emergency medicine at the University of British Columbia, said Wikipedia volunteers have noticed persistent errors with Worldometer, but also with “a more reputable name with a longer history of accuracy,” referring to Johns Hopkins. “We hope they also double check the numbers.”

In an article published in February, JHU said it began manually tracking Covid-19 data for its dashboard in January. When that became unsustainable, the university began scraping data from primary sources and aggregation websites. Lauren Gardner, the associate engineering professor who runs the university’s Covid-19 dashboard, told CNN in a statement that the university uses a “two-stage anomaly detection system” to catch potential data problems. “Moderate” changes are automatically added to the dashboard but flagged so staff can double-check them in real time. Changes beyond a certain threshold require “a human to manually check and approve the values before publication to the dashboard,” Gardner said.
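Gardner’s statement does not say how a change is measured or where the thresholds sit. As a rough illustration only, a two-stage check of the kind she describes might look like the Python sketch below. It assumes changes are measured as a percentage of the previously published value, that small changes are published without a flag, and that the two cutoffs are 10% and 50%; all of these are assumptions made for the example, not JHU’s actual rules, and the function name `classify_update` is hypothetical.

```python
# Assumed thresholds; JHU has not published the values it uses.
MODERATE_CHANGE = 0.10  # hypothetical: flag changes above 10%
LARGE_CHANGE = 0.50     # hypothetical: hold changes above 50%


def classify_update(previous, new):
    """Decide how to handle a new count given the previously published one."""
    if previous == 0:
        # No baseline to compare against, so ask a person to look.
        return "hold_for_approval"
    change = abs(new - previous) / previous
    if change > LARGE_CHANGE:
        # Stage two: a human must approve before publication.
        return "hold_for_approval"
    if change > MODERATE_CHANGE:
        # Stage one: published automatically, but queued for a staff check.
        return "publish_and_flag"
    return "publish"


print(classify_update(1000, 1020))  # 2% change
print(classify_update(1000, 1200))  # 20% change
print(classify_update(1000, 2500))  # 150% change
```

Under these assumed cutoffs, the three calls return `publish`, `publish_and_flag` and `hold_for_approval` respectively.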

The university’s reliance on Worldometer has surprised some academics.

Phil Beaver, a data scientist at the University of Denver, seemed at a loss for words when he was asked what he thought of JHU citing Worldometer.

“I am not sure, that is a great question, I kind of got the impression that Worldometer was relying on [Johns] Hopkins,” he told CNN after a lengthy pause.

Mathieu also seemed taken aback.

“I think JHU has been under a lot of pressure to update their numbers,” he said. “Because of this pressure they have been forced to or incentivized to get data from places that they shouldn’t have, but in general I would expect JHU to be a fairly reliable source.”

In the university’s response to CNN, Gardner said Worldometer was one of “dozens” of sources and that “before incorporating any new source, we validate their data by comparing it against other references.”

“We try not to use a single source for any of our data,” Gardner added. “We use reporting from public health agencies and sources of aggregation to cross-validate numbers.”

The Spanish government and Johns Hopkins are not alone in citing Worldometer. The website has been cited by the Financial Times, The New York Times, The Washington Post, Fox News and CNN.

The British government cited Worldometer data on Covid-19 deaths during its daily press conferences for much of April, before switching to Johns Hopkins data.

“Both Worldometers and John Hopkins provided comprehensive and well-respected data. As the situation developed, we transferred from Worldometers to John Hopkins as John Hopkins relies more on official sources,” read a statement from a UK government spokesperson.

‘Contaminating public opinion’

In Spain, Sánchez’s apparent Johns Hopkins misattribution has become a major controversy. In parliament on Wednesday, center-right People’s Party MP Cayetana Alvarez de Toledo called the government the party “of the lies to CNN and the Spanish people.”

On May 10, a spokeswoman for Spain’s embassy in London complained to CNN about its coverage of the matter.

“Back in April Mr. Sánchez mentioned analysis of statistical data carried out by Johns Hopkins University that are based upon data published by Worldometer,” the spokeswoman wrote in an email sent to the network’s diplomatic editor just after 4 a.m.

“Even if Mr. Sánchez did not mention Worldometer as a primary source in his remarks, [CNN] could have known that most of the comparisons and analysis on Covid-19 in the world use [Worldometer’s] tables.”

In remarks to CNN, a spokesperson for the prime minister’s office acknowledged that Worldometer counts PCR tests and antibody tests together and rejected critics’ call to adjust testing numbers for population, calling it a “trap that the OECD and the Spanish press […] has fallen into” and arguing that Spain should not be compared with small countries like Malta, Luxembourg or Bahrain.

It’s not clear, though, why the Spanish government continues to insist that the testing data published by Worldometer was put out by Johns Hopkins University.

Its refusal to acknowledge its attribution error comes just a month after Spain’s Justice Minister Juan Carlos Campo said the government was considering changes to the law, seeking to crack down on those peddling misinformation.

"I believe, it is more than justified -- with the calm, tranquility needed for any legal changes -- that we review what our legal instruments are to stop those contaminating public opinion in a serious and unjustified way," Campo said.

At the time, Human Rights Watch executive director Kenneth Roth told CNN in an email, “If the justice minister is suggesting penalizing speech that contaminates public opinion, that would be very dangerous.”

“Turning the government into a censor would undermine that public accountability precisely at the moment when it is most needed,” Roth warned.

In her letter to CNN, the embassy spokeswoman was emphatic that Spain was — and still is — fifth in the world in Covid-19 testing, attaching screenshots of Worldometer tables as proof.

“Figures speak louder than words,” she wrote. “And not willing to acknowledge the truth of reality […] is very worrying, to say the least.”

This story is based on reporting from Scott McLean in London and Madrid, Laura Perez Maestro in Madrid, Sergio Hernandez in New York and Gianluca Mezzofiore and Katie Polglase in London. Ingrid Formanek in Spain, Sebastian Shukla in London and Mia Alberti in Lisbon contributed to this report.

Correction: This story has been updated to correct the spelling of Lauren Gardner’s name.