New York (CNN Business) Not only is Alexa listening when you speak to an Echo smart speaker, an Amazon employee may be listening, too.

Amazon (AMZN) employs a global team that transcribes the voice commands captured after the wake word is detected and feeds them back into the software to help improve Alexa's grasp of human speech so it can respond more efficiently in the future, Bloomberg reports.

Amazon reportedly employs thousands of full-time workers and contractors in several countries, including the United States, Costa Rica and Romania, who each listen to as many as 1,000 audio clips per shift, with shifts lasting up to nine hours. Most of the clips were described as "mundane," but some were "possibly criminal," including one that may have captured a sexual assault.

In response to the story, Amazon confirmed to CNN Business that it hires people to listen to what customers say to Alexa. But Amazon said it takes the "security and privacy of our customers' personal information seriously." The company said it annotates only an "extremely small number of interactions from a random set of customers."

The report said Amazon doesn't "explicitly" tell Alexa users that it employs people to listen to the recordings. Amazon's frequently asked questions section says only that the company uses "requests to Alexa to train our speech recognition and natural language understanding systems."

In the privacy settings section of the Alexa app, users can opt out of having their voice recordings used to improve the software.

Bloomberg said that Alexa auditors don't have access to customers' full names or addresses, but do have each device's serial number and the Amazon account number associated with it.

"Employees do not have direct access to information that can identify the person or account as part of this workflow," an Amazon spokesperson told CNN Business. "While all information is treated with high confidentiality and we use multi-factor authentication to restrict access, service encryption, and audits of our control environment to protect it, customers can always delete their utterances at any time."

An Amazon spokesperson clarified that no audio is stored unless the Alexa-enabled device is activated by a wake word.

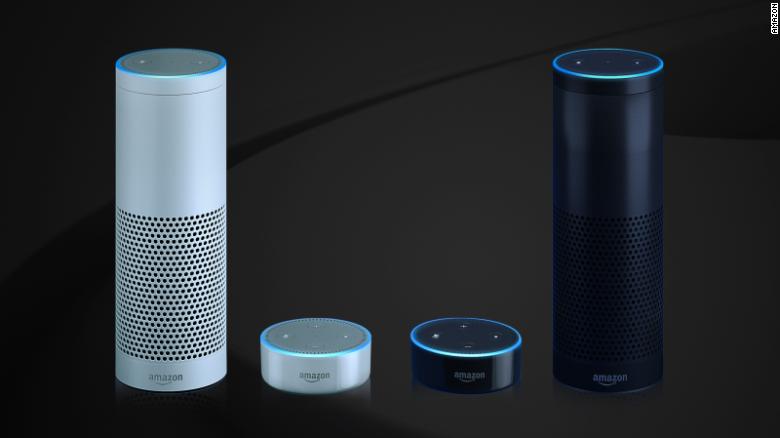

"By default, Echo devices are designed to detect only your chosen wake word," a company spokesperson said. "The device detects the wake word by identifying acoustic patterns that match the wake word."

Amazon has previously been embroiled in controversy over privacy concerns involving Alexa. Last year, an Echo user said the smart speaker had recorded a conversation without their knowledge and sent the audio file to a contact in Seattle. Amazon confirmed the error and said the device's always-listening microphones misheard a series of words and mistakenly sent a voice message.