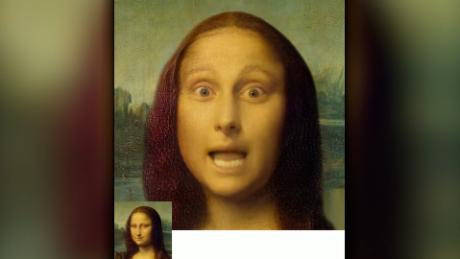

San Francisco (CNN) Deepfake videos are quickly becoming a problem, but there has been much debate about just how big the problem really is. One company is now trying to put a number on it.

There are at least 14,678 deepfake videos (and counting) on the internet, according to a recent tally by a startup that builds technology to spot this kind of AI-manipulated content. And nearly all of them are porn.

The number of deepfake videos is 84% higher than it was last December when Amsterdam-based Deeptrace found 7,964 deepfake videos during its first online count. The company conducted this more recent count in June and July, and the number has certainly grown since then, too.

While much of the coverage of deepfakes has focused on their potential to be a tool for information warfare in politics, the Deeptrace findings show the more immediate issue is porn. In the report, released Monday, Deeptrace said 96% of the deepfakes it spotted consisted of pornographic content, and all of that pornographic content featured women.

Deeptrace CEO and chief scientist Giorgio Patrini told CNN Business that the growth the company charted over just seven months shows the potential for false content to be created and quickly circulated. Even if one of these deepfake videos isn't very realistic-looking (and at this point, plenty of them aren't), it could still be good enough to influence many people's opinions.

"That is a fairly worrying threat for social media," Patrini said.

Deepfakes, whose name combines the terms "deep learning" and "fake", use artificial intelligence to show people doing and saying things they didn't do or say. The medium is quite new: The first known videos, posted to Reddit in 2017, featured celebrities' faces swapped with those of porn stars.

Deeptrace's attempt to quantify the deepfake videos online may be unique; because the technology is nascent and the videos so dispersed around the Web, it can be hard to know where to look for them, and creators don't always mark altered videos as deepfakes.

On the face of it, Deeptrace's deepfake tally of less than 15,000 videos sounds like a pretty small figure, especially when you think of the countless number of videos online. Yet as the 2020 US presidential election approaches, politicians and government officials are worried about these kinds of videos being used to mislead voters. Companies such as Facebook (FB) and Google (GOOG) are so concerned about the possible spread of deepfakes on social media that they're creating their own sets of deepfake videos that they hope can be used to fight ones that pop up in the wild.

Patrini said Deeptrace is keeping track of deepfakes in order to understand the potential threat: things like the kinds of deepfakes that are being made and the places people are sharing them, as well as the places where tools (such as software or tutorials) for making them are being distributed.

"Doing modeling of your adversaries is, in a way, the first step for designing and developing a solution for the problem," he said. He echoed other deepfake experts in acknowledging that, as in the ever-evolving field of cybersecurity at large, techniques for spotting deepfakes will have to keep changing as the technology behind them improves.

To count deepfakes, Deeptrace uses some automated tools to track online communities where people discuss and share deepfakes. These include popular sites such as Reddit and YouTube, where Patrini's team found "fairly innocuous" deepfakes (this video montage purporting to show Elon Musk as a baby, for instance, would fit into that category). Examples cited in the report include a popular deepfake of former President Barack Obama, which was intended as political satire, and another of Facebook CEO Mark Zuckerberg, which was created as part of an art installation.

The vast majority of the deepfakes Deeptrace found, however, come from pornography websites, particularly advertising-supported sites dedicated to deepfake pornography, such as videos that feature a female celebrity's or actress's face swapped onto another woman's body.

According to Deeptrace's latest findings, more than 13,000 of the total number of deepfake videos were found on just nine deepfake-specific porn sites. Deeptrace found that eight of the top 10 pornography websites include some deepfakes in their content, too.

Patrini won't disclose the names of these sites, saying he doesn't want to give them more attention. But he said that the ones focused on deepfake pornography started popping up last year, based on Deeptrace's checks of when the websites' domain names were registered.

Deeptrace also noticed a number of people and businesses that have started offering to make deepfakes for others. The report cited one service that claimed that it could make custom deepfakes in two days with 250 photos of the person that the buyer wants to see in the resulting video. Prices Deeptrace spotted were as low as $3 per video.

Deeptrace only surveyed websites on the English-language internet, but Patrini said the company was surprised to see plenty of deepfakes made with celebrities from Asian countries, too, mainly in pornographic deepfakes but in non-pornographic ones as well.

Sam Gregory, program director for nonprofit Witness, which works with human rights defenders, called the uptick in deepfakes that Deeptrace spotted a "troubling sign".

"It's not growing exponentially yet," he said. But, he added: "It's growing significantly."