For news organizations that would normally never show nudity of any kind, printing the photo of a naked Vietnamese girl screaming in pain and terror after a napalm attack was a no-brainer. In fact, they knew, it belonged on the front page. The New York Times put it there. The New York Daily News put it there. Several other American newspapers did as well.

Forty-five years later, the social network that can deliver news to nearly 2 billion people treated the same picture like it was child porn.

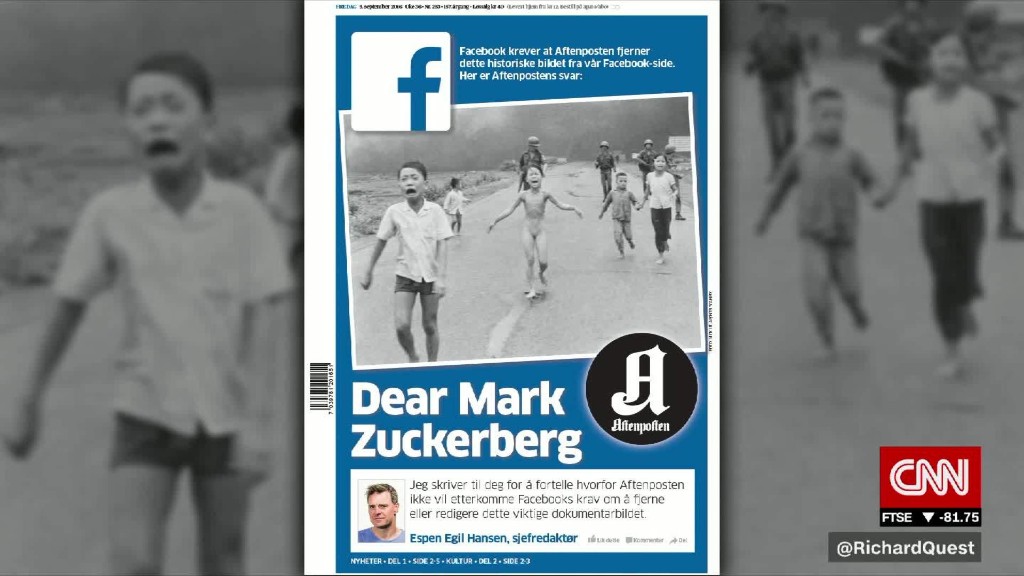

Facebook's decision to temporarily censor the iconic Vietnam War photo of Kim Phúc has set off alarm bells for journalism watchdogs and reignited the debate over the social media company's editorial responsibilities.

"This is a prime example of why Facebook does not want to be considered a media company, or -- God forbid! -- a news organization," Vivian Schiller, the longtime media executive who served for a time as head of news at Twitter, told CNNMoney in an email.

"Given that nearly half of the developed world uses Facebook as a source of news, it's a tough issue and will only get more challenging," said Schiller.

The latest controversy began after Espen Egil Hansen, the editor of the Norwegian newspaper Aftenposten, said he had received a demand from Facebook to remove the photo, which was in an article posted to Aftenposten's page. Within 24 hours, he said, Facebook removed the photo and the article itself.

The photograph, taken by Associated Press photojournalist Nick Ut in 1972, won a Pulitzer Prize and is one of the most memorable images of the 20th century. The AP itself initially resisted running the photo on its wire because it showed full frontal nudity -- which, as Ut once described it, was "an absolute no-no at the Associated Press in 1972." But after internal debate, Ut has said, the AP decided the photo's news value "overrode any reservations about nudity."

Facebook reinstated the photograph early Friday afternoon and issued a statement saying that "the history and global importance of this image" outweighed its normal Community Standards, which forbid naked images of children.

But the statement did nothing to address larger questions about Facebook's editorial responsibilities -- or indeed about what would happen if the same kinds of questions came up about any other newsworthy photograph.

Despite hosting news content, and despite the fact that one recent survey showed that 44% of U.S. adults get news from the site, the social network has refused to define itself as a media company or accept any of the responsibilities that might come with that, including employing editors to exercise news judgment and curate content.

Mark Zuckerberg, the Facebook chairman, CEO and co-founder, is "the most influential editor-in-chief in the world," Hansen told CNNMoney's Nina Dos Santos on Friday. "With that follows a great responsibility. I ask him to think through what he is doing ... to the public debate all over the world."

In August, Facebook decided to remove editors for its Trending topics list and leave that influential tool in the hands of an algorithm. Since then, the list has promoted multiple false stories, including one that claimed Fox News host Megyn Kelly was a "traitor" who had been kicked out of the network "for backing Hillary [Clinton]."

Even on Friday, as Facebook was dealing with the fallout from the Vietnam War photo, the Trending topics list was promoting an article from the Daily Star, a conspiracy theory tabloid, which claimed to have new footage showing that bombs had been planted in the World Trade Center towers on September 11, 2001.

For Schiller, the censorship of Ut's photo and the Trending topics controversies were indicative of a larger problem stemming from Facebook's reluctance to exercise editorial oversight.

"A tech company can have hard and fast rules like 'no nudity.' Period. A media company, especially a news organization, makes decisions based on nuances of news judgment, context, relevance, and the imperative to bear witness to atrocities like the one depicted here," Schiller said.

"Facebook is not staffed to make those kinds of decisions," she continued, "and given the challenges they've faced with seemingly less fraught efforts like trending, I can't imagine they will want to anytime soon."

Jeff Jarvis, the Director of the Tow-Knight Center for Entrepreneurial Journalism at CUNY's Graduate School of Journalism, and an advocate for a free and open Internet, has written about this issue several times.

"I've said it before and I'll say it again: Facebook needs an editor — to stop Facebook from editing," he wrote on Friday. "It needs someone to save Facebook from itself by bringing principles to the discussion of rules."

In an email, Jarvis said the problem in the case of the Ut photo was "one of procedure."

"The algorithm enforces a rule that we would all endorse: no pictures of nude children," he wrote. "There needs to be an appeal of such a rule-based decision to a reasonable and discriminating person."

In its statement on the matter Friday, Facebook said it would "adjust our review mechanisms to permit sharing of the image going forward."

"We are always looking to improve our policies to make sure they both promote free expression and keep our community safe, and we will be engaging with publishers and other members of our global community on these important questions going forward," the company said.